
IMPACTS are evaluated in every assessment item. Read the task sheet for each IA to find the explicit impacts to target. In IA1 and IA2 these will be personal, social and economic. IA3 has a whole section on impacts (Part 3). Either way:

- Personal - impacts on you the author, developer, resident, community member, digital citizen

- Social - impacts on society (good / bad)

- Economic - $$$ profit / loss

- Ethical (e.g., moral), legal (e.g., legislation compliance, defamation) and sustainability impacts (think green)

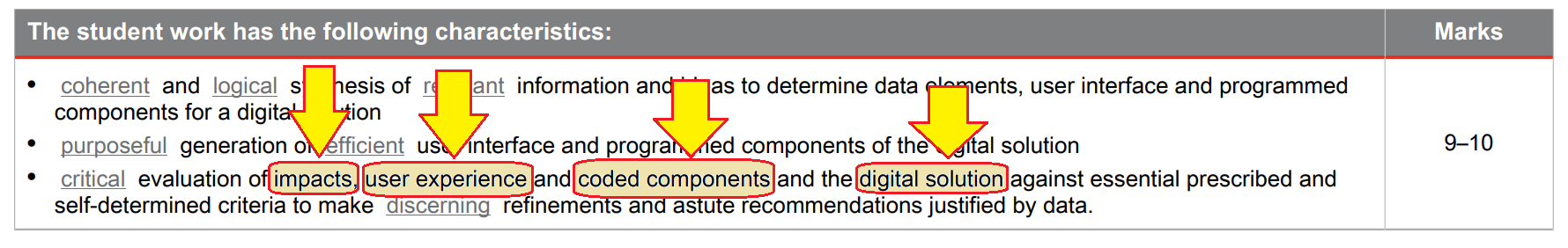

Evaluation

Figure out what you actually have to evaluate with the P&SD criteria first:

Knowing this, make sure you have read the IA task sheet, which tells you specifically what each of these targets in each IA.

Here are some additional notes:


COMPONENTS are evaluated in every assessment item, although in IA2 and IA3 these are CODED COMPONENTS. This differs from evaluating the solution or prototypes: when evaluating components, you are evaluating a selection of modular, individual, isolated 'components'. Again, read the task sheet for more specific advice on the components required by each task:

- Try to incorporate some of the words from the MEASURES table below when writing criteria to measure the success of COMPONENTS. Measures using words like EFFICIENCY, FUNCTIONALITY, USABILITY, MODULARITY, SCALABILITY, or even WORST-CASE RUNTIME COMPLEXITY are great measures for components.

- IA1 "COMPONENTS" (since there is no code in IA1) means data components, algorithm components and user interface components, which leans into the realm of IA2 user experience (see below)

- IA2 and IA3 "CODED COMPONENTS": what you coded. There will be overlap with algorithms, data components, etc. (as per IA1), because design flaws there will flow through into your code.

| MEASURES | What to look for when evaluating in the negative... |
|---|---|
| Reliability | Look for reasons why a system will not satisfactorily perform the task for which it was designed or intended |
| Accuracy | Look for logical threats to keeping accurate data records |
| Maintainability | Look for problems with the ease and speed at which a system can maintain operational status, especially after fault or repair. In other words, can the system easily do what it's supposed to do without 'needless' human intervention? |
| Security | Look for security issues with data storage, broadcasting (i.e. encryption strength) or user authentication. Or maybe another security issue entirely; you get the idea. This one is about unwanted, unauthorised or unauthenticated access to data. |
| Sustainability | Look for ways in which the system doesn’t support the needs of the present without compromising the ability of future generations to support their needs. Scalability can come into this: in the future, how will the system be available on a larger scale, with greater volumes of data and a more diverse community with a broader range of end user needs? |
| Efficiency | Efficiency seeks maximum productivity with minimal consumption of system resources. Efficiency takes into account a lot of factors and criteria, such as reliability, speed and programming practices (the latter of which can also be measured by the criteria of scalability and modularity). So look for inefficiencies in the code, interchange format or DB design... |
| ... there are many more criteria you could choose from, both in and out of the Digital Solutions syllabus glossary. | |
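If you want evidence for a measure like EFFICIENCY or WORST-CASE RUNTIME COMPLEXITY, one option is to count the steps a component takes as the data grows. The sketch below is only an illustration, not something taken from the task sheet: `linear_search` and `binary_search` are hypothetical stand-ins for whatever component you actually coded, and searching for a value that is not in the list is assumed to be the worst case.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 when target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


def binary_search(sorted_items, target):
    """Return (index, probes) for a list that is already sorted ascending."""
    probes = 0
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        probes += 1  # one probe of the middle element per loop pass
        if sorted_items[mid] == target:
            return mid, probes
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1, probes


# Worst case for both: the target (-1) is not in the data at all.
for size in (1_000, 10_000, 100_000):
    data = list(range(size))
    _, linear_steps = linear_search(data, -1)
    _, binary_steps = binary_search(data, -1)
    print(f"n={size}: linear={linear_steps} steps, binary={binary_steps} steps")
```

A small table of these counts (or timings) for increasing data sizes is the kind of data that could back up a claim about scalability or efficiency when you write up a component.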

- LOW-FIDELITY PROTOTYPES (IA1), DIGITAL SOLUTION (IA2), or DATA EXCHANGE SOLUTION (IA3) all mean (pretty much) the same thing, basically WHAT YOU GENERATED.

- Think of this as different from COMPONENTS: it is the SUM OF ALL COMPONENTS, i.e., the finished product (holistically).

- ☆☆☆ IA2 USER EXPERIENCE - accessibility, effectiveness, safety, utility and learnability. These can be tagged in user interface designs but are useful throughout generation (e.g., coding exception handling is an example of Safety).
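As a rough illustration of the exception handling point above, here is a minimal Python sketch of a component coded with Safety in mind. The function name `parse_quantity` and the 1 to 99 range are assumptions made up for this example; your own solution will have its own inputs and limits.

```python
def parse_quantity(raw_text, minimum=1, maximum=99):
    """Convert typed input to an int, returning (value, error_message).

    Exactly one of the two returned items is None.
    """
    try:
        value = int(raw_text.strip())
    except ValueError:
        # Bad input is caught here instead of crashing the program.
        return None, "Please enter a whole number."
    if not minimum <= value <= maximum:
        return None, f"Please enter a number from {minimum} to {maximum}."
    return value, None


# Hypothetical inputs an end user might type into a form field.
for typed in ("5", "abc", "200"):
    value, error = parse_quantity(typed)
    print(repr(typed), "->", value if error is None else error)
```

A before and after pair (the crash without the `try`, the friendly message with it) is the sort of thing you could screenshot and tag as Safety in your evaluation.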

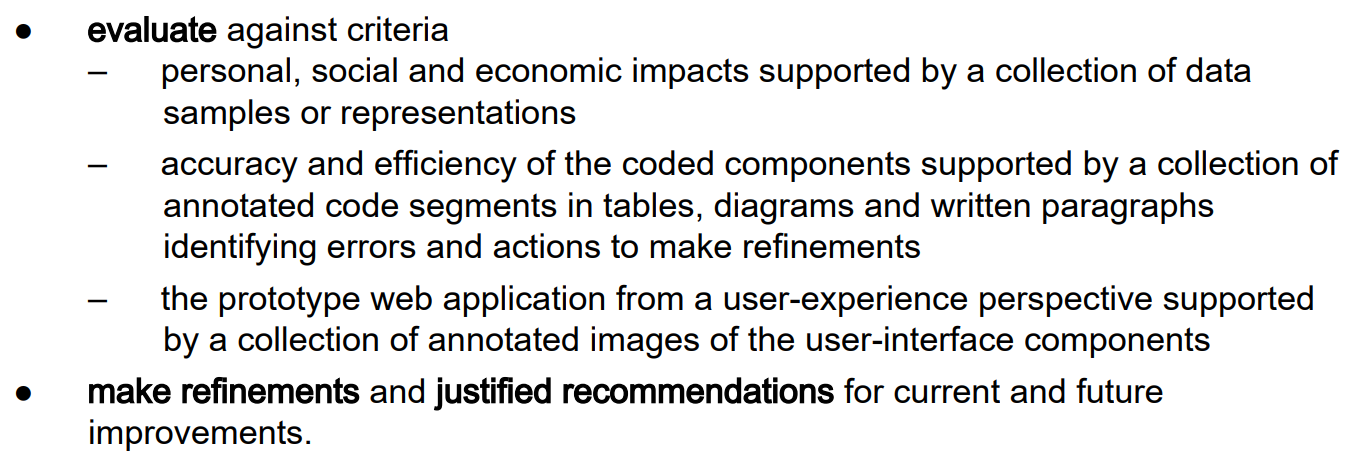

Once you have some idea what to evaluate, the difficult skill now will be to assess or judge your criteria based on evidence.

The first step (again) is to read the task sheet to make sure you know what you are doing. Here is an example from the IA2 task sheet:

This means that data for justification is more than just screenshots of your completed build (although these are good for evaluating UX). End-user surveys that you have collated and summarised, code snippets or other data may be used here. For other examples of testing, see the older evaluation notes and scroll down.
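One possible way to collate and summarise end-user survey results is sketched below. Everything in it is assumed for illustration: the ratings are made-up placeholder data (not real results), the 1 to 5 scale is an assumption, and the three criteria are just a sample of the IA2 user experience criteria.

```python
from statistics import mean

# Hypothetical placeholder responses: each end user rated three
# UX criteria on an assumed scale of 1 (poor) to 5 (excellent).
responses = [
    {"accessibility": 4, "learnability": 5, "utility": 3},
    {"accessibility": 2, "learnability": 4, "utility": 4},
    {"accessibility": 3, "learnability": 5, "utility": 2},
]


def summarise(survey_responses):
    """Return {criterion: (mean rating, lowest rating)} across all responses."""
    summary = {}
    for criterion in survey_responses[0]:
        ratings = [response[criterion] for response in survey_responses]
        summary[criterion] = (round(mean(ratings), 2), min(ratings))
    return summary


for criterion, (average, lowest) in summarise(responses).items():
    print(f"{criterion}: mean {average}, lowest {lowest}")
```

A summary like this gives you a number to point at when you judge a criterion, and a low score on one criterion is a natural lead-in to a refinement or recommendation.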

Final point: refinements are things you have already fixed or improved; recommendations are for things left for the future. Recommendations are easier because they haven't happened yet, but they can still be justified. You should be able to produce some data for justification for your refinements. Do this as best you can, and take plenty of screenshots during your early build phases (you'll hit a tonne of errors)!

older evaluation notes